Blog Series: Exploring the Power of AI

This is part 6 in an ongoing series to acquaint people with AI.

Part 1: The AI Revolution: Why You Should Care and Why You Should Trust Us

Part 2: Demystifying AI: What It Is and Why It Matters

Part 3: Intelligent Innovations: AI in Daily Life

Part 4: The Future of Work: AI’s Role in Professional Settings

Part 5: Kickstart Your AI Journey: Essential Tools and Resources to Get Started

Building Responsible AI: Ethical Challenges and Solutions

Introduction

Ethics in AI matters not just to researchers and developers but to everyone who uses AI. Here are some reasons why:

- Impact on daily life: AI is becoming an ever larger part of our day-to-day activities. We need to understand the concerns it raises, make sure they are being addressed, and make sure our own privacy is respected.

- Fairness: AI is only as good as the data it’s trained on. If its training data reflects limited viewpoints, its output can perpetuate bias. Correcting this takes a conscious and deliberate effort, and we can influence the input data.

- Privacy: We need to make sure our privacy is respected and to be aware of who collects (and shares) what information about us.

- Transparency: It’s important for companies to make clear what data they use and for what purposes. Only then can we all be held accountable.

- Autonomy: When we make decisions based on data, we need to make informed decisions, knowing where that data came from. Is this the opinion of, say, an expert who has done this many times before, or of an LLM that is predicting the next words in a phrase?

- Consequences: Standards established now will shape the standards of the future. In a new field, it’s especially important to try to get this right.

I urge you to read about Microsoft’s six principles of responsible AI. They make a strong case for fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability.

Discussion

Practically speaking, what does this mean to the average user of AI? I propose three questions to ask:

- Where did the model’s data come from?

- Where is my data going?

- Should I give credit?

Source of data

Where did the model’s data come from? Did it scrape content from creators who had not given permission? Did it get around paywalls? Steve Little offers an example: what if Stephen King gave permission for one website (and only that website) to share the first chapter of one of his books, and a model then used that chapter for training? Late last year, the New York Times (and later other newspapers) sued OpenAI and Microsoft for using its published work as training data. (Gift NYT link) (As far as I can tell, the matter is still open.) We ought to stay aware of which models source their data ethically.

Data sharing

What happens to the data I share with a model? Before sharing confidential information, check the model’s privacy policy; it’s all too easy to paste in your financial numbers and ask it to make you a budget! In some models, perhaps all, you can opt out of having your conversations used for training. In ChatGPT, for example, turn off the “Improve the model for everyone” setting.

Anthropic, the company behind Claude, says that it does not use conversations for training unless you explicitly opt in, and that it deletes prompts within 90 days.

I couldn’t find Microsoft’s policy for Copilot on this page.

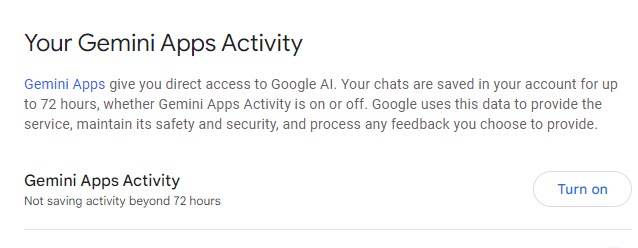

Google lets you control whether Gemini saves your prompts through the Gemini Apps Activity setting.

Disclosure

While norms are still being formed, it’s generally accepted that you do not have to credit an AI as a source. However, and this is an important “but,” that does not mean the people who consume your content don’t deserve to know when you’ve used AI to create it. Groups everywhere are discussing and debating how much AI involvement it takes to cross the threshold into required disclosure. On this blog, as at my employer, I err on the side of overdisclosure: if I have used AI at all, I let people know. In Worldwide Learning at Microsoft, if we have used AI for even the smallest part of our content, we tag the Microsoft Learn page with an AI tag, which adds a disclosure to the page.

Especially now, when AI fakes are proliferating and it can be hard to tell whether an image is real or generated, it’s important to maintain trust by revealing your sources.

Challenge

Your challenge is to seek out different opinions and/or policies on AI disclosure. Let us know in the comments below where YOU think the line should be!

Summary

In summary, ethics in AI is about ensuring that the technology develops in a way that is fair, transparent, and beneficial to all members of society. It’s about safeguarding rights, promoting equality, and maintaining trust in the systems that increasingly shape our world.

Further resources

- Transparency and Explainability: Timnit Gebru: Gebru’s work emphasizes the importance of transparency and has been influential in discussions about AI ethics. You can read more about her work in the article “How Timnit Gebru’s Exit from Google Changed AI Ethics” by Wired.

- Fairness and Bias Mitigation: Cathy O’Neil: O’Neil’s book Weapons of Math Destruction discusses the dangers of biased algorithms. The book is available for purchase or preview on Amazon. She also writes extensively on this topic on her blog.

- Accountability and Governance: Stuart Russell: Russell has written about the need for AI alignment with human values. His book Human Compatible provides a deeper dive into this topic. You can find it on Amazon. He also discusses these ideas in interviews like this one on Wired.

- Privacy and Data Security: Shoshana Zuboff: Zuboff’s The Age of Surveillance Capitalism is a critical analysis of how AI affects privacy. The book is available on Amazon. You can also read some of her articles and interviews on her website.

- Human-Centric AI Design: Fei-Fei Li: Li advocates a human-centered approach to AI. You can read more about her views in articles like “How to Make AI That’s Good for People,” published by The New York Times. She also gave a TED Talk on this topic, which you can watch here. [Janet’s note: The TED Talk no longer seems to be available, but her speaker page is here.]

- Sustainability and Environmental Impact: Kate Crawford: Crawford’s work on the environmental impact of AI is discussed in her book Atlas of AI. It is available on Amazon. She also co-authored an article, “AI and the Environmental Crisis,” which provides insights into this issue.

- Public Engagement and Education: Yoshua Bengio: Bengio emphasizes the importance of public understanding of AI. He has spoken on this topic in various forums, such as in his interview with IEEE Spectrum. His work and views are also discussed in this Nature article.

Disclosure

AI was used in several places in this post: to create the title, to create the outline, and to compile the further resources. The main image was created by Bing Image Creator.
